The 100% Server Uptime Illusion

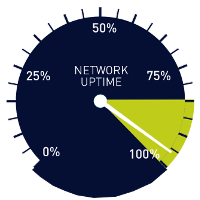

Posted on May 25th, 2010 by Victoria Pal in Monitoring, Tech

You've seen 98.9%, 99.9%, and even 100% server uptime labels on hosting sites. The first thought that comes to mind is, "Well, 99.9% sounds pretty good; 0.1% is no big deal," and that is a fair reaction. What many people miss is that even if the server is reachable, it isn't necessarily fully functional. Many other things can go wrong with a server. A 99.9% uptime sticker is no guarantee that your business won't face other problems while the server is up and running. Hosting providers also calculate uptime in ways that aren't intuitive from a user's perspective, leaving certain website downtime out of the figure.

- First of all, not all downtime is counted. Scheduled maintenance is not part of the equation. Even several hours of planned downtime is not something hosting companies include: when monthly server uptime is calculated, such planned service interruptions are excluded.

- Some, if not most, hosting providers tend to overlook shorter downtime periods. Outages of 3-5 minutes are not considered significant and don't end up on the 99.9% sticker. Multiple short outages like these can add up to a real difference by the end of the day.

- 0.1% means twice the trouble. 99.9% uptime means about 8 hours and 45 minutes of downtime per year. So when you go down a notch to 99.8%, your calculated downtime doubles to about 17 hours and 30 minutes. At 98.9%, it grows to roughly 96 hours.

- Resources are limited. "Unlimited" is just a nice way to sugarcoat "enough for the majority of common users." Your business might not be a common one, and demand might be high. There might be other significant sites sharing the same hosting hardware. At some point, it can all build up to an overload, resulting in poor loading times, broken transactions, and even a website crash. The server will remain online, but the performance won't meet your needs. Application monitoring is a good way to quickly and easily discover such problems.
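The downtime figures in the list above are simple arithmetic, and a short sketch makes it easy to check any uptime label. This is only an illustration: the function name `downtime_per_year` is made up for this example, and it assumes a 365-day year with no downtime excluded from the calculation.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # assumes a non-leap year


def downtime_per_year(uptime_percent: float) -> tuple:
    """Return the yearly downtime implied by an uptime label as (hours, minutes).

    Hypothetical helper for illustration only; partial minutes are dropped.
    """
    if not 0.0 <= uptime_percent <= 100.0:
        raise ValueError("uptime_percent must be between 0 and 100")
    downtime_minutes = int((100.0 - uptime_percent) / 100.0 * MINUTES_PER_YEAR)
    return divmod(downtime_minutes, 60)


if __name__ == "__main__":
    for label in (100.0, 99.9, 99.8, 98.9):
        hours, minutes = downtime_per_year(label)
        print(f"{label}% uptime allows {hours} h {minutes} min of downtime per year")
```

For 99.9% this gives 8 hours and 45 minutes; for 98.9% it gives 96 hours and 21 minutes. Scheduled maintenance and ignored short outages come on top of these numbers.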

100% uptime is not feasible. Eventually, something will go wrong. Network backbones will cause problems, power failures will happen, server software and hardware will fail now and then, and human error will always be a factor. So, my advice is: don't try to find the ultimate provider, as it doesn't exist. Instead, find a decent provider with good server uptime and performance, 24-hour technical support, and frequent backups. Oh, and why don't you go for a nice remote website monitoring service, just in case? It might be the best money you have ever spent.

It Don't Mean a Thing If It Ain't Got That Ping

Posted on May 18th, 2010 by Victoria Pal in Tech

Ping is one of the best-known and easiest-to-use computer network tools. Its basic application is to test the availability of hosts across an IP network. The ping tool was written by Mike Muuss way back in December 1983. Its practical use and reliability were undeniable until 2003, when a lot of internet service providers began filtering ICMP ping requests due to the growing threat from internet worms and basic DDoS attacks. Nearly 3 decades later, ping stands tall, providing common computer users, webmasters, and network administrators with valuable information.

Check your network adapter

Common users can use ping from their local machine to check whether their LAN or WLAN card is enabled and working by simply having the machine ping itself. The command will look something like this:
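A minimal sketch of such a self-check, assuming the loopback address 127.0.0.1 as the target; the machine's own LAN address can be passed instead. The helper names `build_ping_command` and `adapter_responds` are hypothetical, and the sketch relies only on the fact that the system `ping` tool takes the packet count as `-n` on Windows and `-c` on Unix-like systems.

```python
import platform
import subprocess
from typing import List, Optional


def build_ping_command(host: str = "127.0.0.1", count: int = 4,
                       system: Optional[str] = None) -> List[str]:
    """Build the ping command line for the current (or a given) operating system."""
    system = system or platform.system()
    count_flag = "-n" if system == "Windows" else "-c"
    return ["ping", count_flag, str(count), host]


def adapter_responds(host: str = "127.0.0.1", count: int = 4) -> bool:
    """Run ping against the host and report whether it exited successfully.

    Assumes a `ping` executable is available on the PATH.
    """
    result = subprocess.run(
        build_ping_command(host, count),
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode == 0
```

Calling `adapter_responds()` runs the equivalent of `ping -c 4 127.0.0.1` (or `ping -n 4 127.0.0.1` on Windows) and returns `True` when the replies come back.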

Measuring Website Response Time

Posted on May 16th, 2010 by Victoria Pal in Tech

The response time of a webpage is the time elapsed from the moment the user requests a URL until the requested page is fully displayed. This process can be separated into 3 logical units: transmission, processing, and rendering.

• Transmission consists of the time to transmit the user's request and receive the response from the server.

• Processing is the part of the operation where the server has to process the request and generate the response.

• Rendering is a client-side operation and consists of the time needed by the client machine to display the response to the user.
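The units above can be approximated from the client side with a short sketch. It is an illustration under stated assumptions, not a full measurement tool: the names `ResponseTiming` and `measure_response_time` are made up for this example, the time to first byte lumps request transmission and server processing together because a client cannot separate them, and rendering time is not measured at all since no browser is involved.

```python
import time
from dataclasses import dataclass
from typing import Callable
from urllib.request import urlopen


@dataclass
class ResponseTiming:
    time_to_first_byte: float  # seconds: request transmission + server processing
    download_time: float       # seconds: transmission of the rest of the response

    @property
    def total(self) -> float:
        return self.time_to_first_byte + self.download_time


def measure_response_time(url: str, opener: Callable = urlopen,
                          timeout: float = 10.0) -> ResponseTiming:
    """Time a single request; `opener` can be swapped out for testing."""
    start = time.perf_counter()
    with opener(url, timeout=timeout) as response:
        response.read(1)                  # wait for the first byte of the body
        first_byte = time.perf_counter()
        response.read()                   # read the remainder of the body
    end = time.perf_counter()
    return ResponseTiming(first_byte - start, end - first_byte)
```

A call such as `measure_response_time("https://www.example.com/")` returns both parts in seconds. Repeating the call several times and averaging gives a steadier picture than a single run.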

Importance of Website Response Time

Posted on April 29th, 2010 by Victoria Pal in Tech

Why is response time so important? Would it hurt your visitors to wait just a few more seconds for your page to appear? Well, to cut a long story short: yes, it will hurt your visitors' experience, and it will hurt your site.

When you are online, every second counts. With so many websites on every topic only a few clicks away from each other, your site cannot afford to lose visitors and prospective clients over something as avoidable as bad response time.

Copyright 2000-2026, WebSitePulse. All rights reserved.
