
WebSitePulse Chrome Extensions, Part 1

Posted on February 24th, 2014 by Robert Close

I have already reviewed our Chrome transaction recorder extension in a previous post, where I explained in detail how to record a transaction using this specific extension. Now it’s time to look at our Test Tools and Current Status Chrome extensions, so stay tuned.

To get to the Chrome Web Store, go to Menu -> Tools -> Extensions or follow this link: chrome.google.com/webstore.

How to Use the Reports Section

Posted on February 17th, 2014 by Robert Close in Monitoring

An Overview

Collecting monitoring data is a significant part of our business, but presenting this data in an easy-to-understand way is just as important to us and to our customers. We have many different levels of monitoring and thus a variety of reports clients can choose from. As our company is customer-driven, we have created more than 100 different reports to cover our clients’ requirements.

Setting Up Blacklist Monitoring

Posted on February 13th, 2014 by Robert Close in Monitoring, Guides

Have you ever wondered why some spam filters block the emails sent from your server?

A possible reason for such problems is that your servers are listed in a DNSBL (DNS-based Blackhole List), which spam filters consult to decide whether a sender is a spammer. To make sure the IP of your mail server is not present in the lists of most major blacklist services, you can use our Blacklist (DNSBL) monitoring service.
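To illustrate what a DNSBL check involves, here is a minimal Python sketch of the general lookup convention: the IPv4 octets are reversed, the blacklist's DNS zone is appended, and the resulting name is resolved. An answer in the 127.0.0.0/8 range conventionally means the address is listed, while no answer means it is not. This is not a description of how the WebSitePulse service is implemented; the function names are made up for illustration, and `dnsbl.example.org` is a placeholder zone rather than a real blacklist.

```python
import ipaddress
import socket


def dnsbl_query_name(ip: str, zone: str) -> str:
    """Build the DNSBL query name: reversed IPv4 octets followed by the zone."""
    addr = ipaddress.IPv4Address(ip)  # raises ValueError on malformed input
    reversed_octets = ".".join(reversed(str(addr).split(".")))
    return f"{reversed_octets}.{zone}"


def is_listed(ip: str, zone: str) -> bool:
    """Return True if the zone answers with a 127.x.x.x record for this IP."""
    try:
        answer = socket.gethostbyname(dnsbl_query_name(ip, zone))
    except socket.gaierror:
        # No record was returned. This sketch treats any lookup failure as
        # "not listed", so it cannot tell a clean IP from a DNS outage.
        return False
    return answer.startswith("127.")


if __name__ == "__main__":
    # Placeholder zone (hypothetical); substitute the zone of a blacklist you use.
    print(dnsbl_query_name("192.0.2.10", "dnsbl.example.org"))
```

A real monitor would typically query several zones on a schedule and distinguish resolver errors from genuine "not listed" answers, which this sketch does not attempt.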

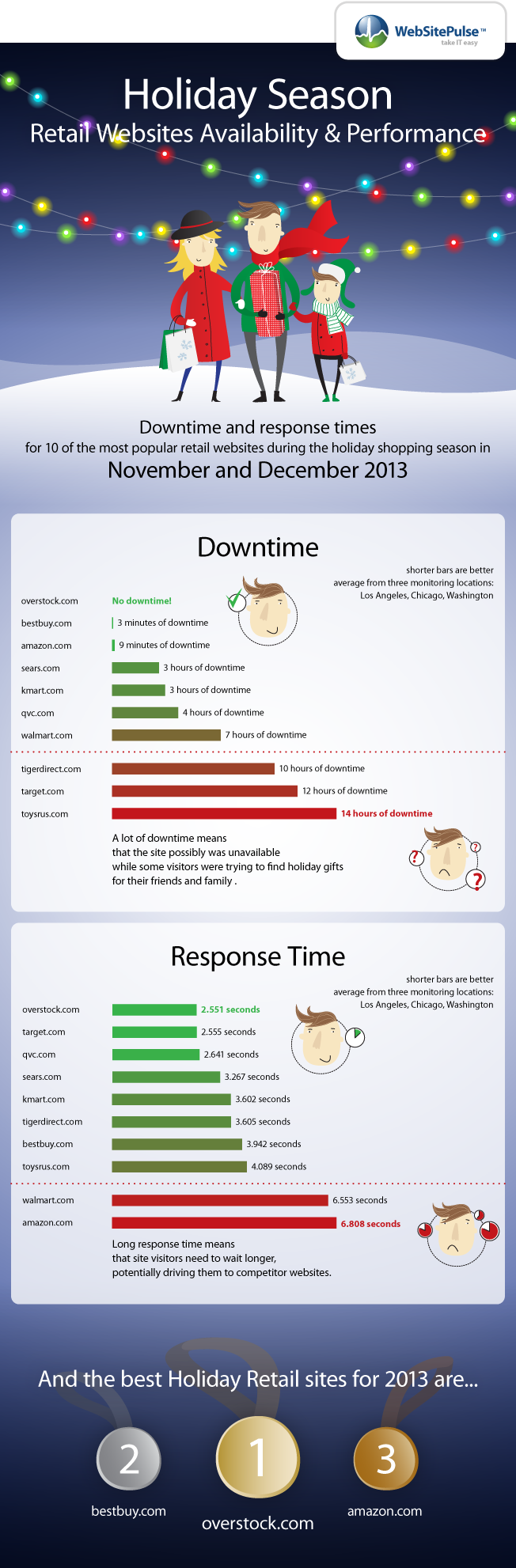

Web Performance and Availability during Holiday Season 2013, [INFOGRAPHIC]

Posted on January 27th, 2014 by Boyana Peeva in Performance Reports

Online sales during this holiday season (November 21st, 2013 – January 6th, 2014) weren’t as high as retailers had hoped, but December sales still gave a lift to the US economy.

Use Stats Publisher to Show Your Target's Uptime to Clients

Posted on January 20th, 2014 by Damien Jordan in Tech

If your business largely depends on your website, you may often need to show its uptime to your customers.

As a WebSitePulse client, you have an option called ‘Stats Publisher’, where you set up a public report page that includes the uptime statistics for one or more of your targets. The reports are published on www.MyWebReports.net and can be viewed by everyone or only by the people you give access to.

Copyright 2000-2026, WebSitePulse. All rights reserved.
